This past week, much has been made of the fact that iPhones and Android phones stored their location history in the clear. Another route to geolocating one of these smartphones, or any webcam, comes from the ability to work out where a photo was taken by correlating it with satellite imagery or with other photos. Two projects along those lines come to mind: Webcam Geolocalization and IM2GPS. The first technique uses fixed webcams, but I think it could eventually be extended to mobile cameras. The second technique uses Flickr. These results are only possible because there are so many cameras and webcams, i.e. the sensor network needs to be dense in some fashion. Note that 1 billion cameras will be sold in phones this year. One can definitely think of the sensor network formed by these cameras as a way to gather meteorological information and more...

From the respective projects' websites:

1. Webcam Geolocalization

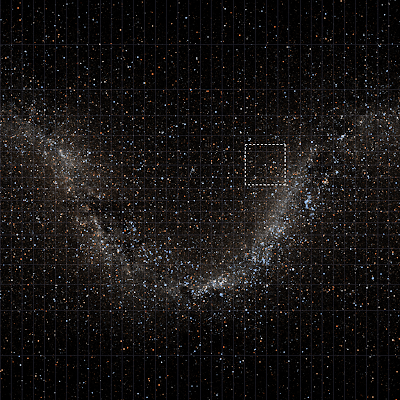

It is possible to geolocate an outdoor camera using natural scene variations, even when no recognizable features are visible. (left) Example images from three of the cameras from the AMOS dataset. (right) Correlation maps with satellite imagery; a measure of the temporal similarity of the camera variations to satellite pixel variations is color coded in red. The cross shows the maximum correlation point, the star shows the known GPS coordinate.

Overview

A key problem in widely distributed camera networks is geolocating the cameras. In this work we consider three scenarios for camera localization: localizing a camera in an unknown environment using the diurnal cycle, localizing a camera using weather variations by finding correlations with satellite imagery, and adding a new camera in a region with many other cameras. We find that simple summary statistics (the time course of principal component coefficients) are sufficient to geolocate cameras without determining correspondences between cameras or explicitly reasoning about weather in the scene.
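To make the satellite-correlation idea concrete, here is a minimal sketch in Python with NumPy. It is not the authors' code: the function names (`camera_signal`, `correlation_map`, `locate`), the choice of the first principal component only, and the use of plain Pearson correlation are all assumptions made for illustration. It assumes the webcam frames and the satellite frames have already been sampled at the same time instants.

```python
import numpy as np

def camera_signal(frames):
    """Time course of the first principal component coefficient.

    frames: array of shape (T, H, W), one grayscale webcam image per time step.
    Returns an array of shape (T,).
    """
    T = frames.shape[0]
    X = frames.reshape(T, -1).astype(float)
    X -= X.mean(axis=0)  # center each pixel over time
    # For centered data X = U S V^T, the coefficients of the first
    # principal component over time are U[:, 0] * S[0].
    U, S, _ = np.linalg.svd(X, full_matrices=False)
    return U[:, 0] * S[0]

def correlation_map(signal, satellite):
    """Pearson correlation between `signal` and every satellite pixel.

    signal: shape (T,); satellite: shape (T, H, W), same time sampling.
    Returns an (H, W) map of correlation values in [-1, 1].
    """
    T, H, W = satellite.shape
    sat = satellite.reshape(T, -1).astype(float)
    sat -= sat.mean(axis=0)
    sig = signal - signal.mean()
    denom = np.linalg.norm(sat, axis=0) * np.linalg.norm(sig)
    denom[denom == 0] = np.inf  # constant series get correlation 0
    return ((sig @ sat) / denom).reshape(H, W)

def locate(signal, satellite):
    """(row, col) of the satellite pixel with the strongest correlation.

    The sign of a principal component is arbitrary, so the absolute
    value of the correlation is used.
    """
    corr = np.abs(correlation_map(signal, satellite))
    row, col = np.unravel_index(np.argmax(corr), corr.shape)
    return int(row), int(col)
```

Calling `locate(camera_signal(frames), satellite)` returns the pixel playing the role of the cross in the figure above. Mapping that pixel back to a latitude and longitude depends on the satellite product's georeferencing, which this sketch leaves out.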

The relevant paper is: Participatory Integration of Live Webcams into GIS by Austin Abrams, Nick Fridrich, Nathan Jacobs, and Robert Pless. The abstract reads:

Global satellite imagery provides nearly ubiquitous views of the Earth’s surface, and the tens of thousands of webcams provide live views from near Earth viewpoints. Combining these into a single application creates live views in the global context, where cars move through intersections, trees sway in the wind, and students walk across campus in realtime. This integration of the camera requires registration, which takes time, effort, and expertise. Here we report on two participatory interfaces that simplify this registration by providing applications which allow anyone to use live webcam streams to create virtual overhead views or to map live texture onto 3D models. We highlight system design issues that affect the scalability of such a service, and offer a case study of how we overcame these in building a system which is publicly available and integrated with Google Maps and the Google Earth Plug-in. Imagery registered to features in GIS applications can be considered as richly geotagged, and we discuss opportunities for this rich geotagging.

2. IM2GPS

IM2GPS: estimating geographic information from a single image by James Hays and Alexei Efros. The abstract reads:

Estimating geographic information from an image is an excellent, difficult high-level computer vision problem whose time has come. The emergence of vast amounts of geographically-calibrated image data is a great reason for computer vision to start looking globally — on the scale of the entire planet! In this paper, we propose a simple algorithm for estimating a distribution over geographic locations from a single image using a purely data-driven scene matching approach. For this task, we will leverage a dataset of over 6 million GPS-tagged images from the Internet. We represent the estimated image location as a probability distribution over the Earth's surface. We quantitatively evaluate our approach in several geolocation tasks and demonstrate encouraging performance (up to 30 times better than chance). We show that geolocation estimates can provide the basis for numerous other image understanding tasks such as population density estimation, land cover estimation or urban/rural classification.
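The data-driven scene matching described in this abstract can be illustrated with a small sketch. This is only a toy under stated assumptions, not the IM2GPS implementation: the image features are taken to be precomputed vectors, matching is plain Euclidean nearest-neighbor search, and the "distribution over the Earth's surface" is approximated by a coarse latitude/longitude histogram of the neighbors' GPS tags. The names `nearest_neighbors`, `location_distribution`, `k`, and `cell_deg` are hypothetical.

```python
import numpy as np

def nearest_neighbors(query, features, k):
    """Indices of the k database images closest to `query` in feature space."""
    dists = np.linalg.norm(features - query, axis=1)
    return np.argsort(dists)[:k]

def location_distribution(query, features, latlon, k=5, cell_deg=10.0):
    """Histogram over a lat/lon grid built from the k nearest neighbors.

    query:    feature vector of the image to geolocate, shape (D,)
    features: database feature vectors, shape (N, D)
    latlon:   GPS tags of the database images in degrees, shape (N, 2)
    Returns an array of shape (ceil(180/cell_deg), ceil(360/cell_deg))
    that sums to 1. Row 0 starts at latitude -90, column 0 at longitude -180.
    """
    idx = nearest_neighbors(query, features, k)
    n_lat = int(np.ceil(180.0 / cell_deg))
    n_lon = int(np.ceil(360.0 / cell_deg))
    rows = np.clip(((latlon[idx, 0] + 90.0) // cell_deg).astype(int), 0, n_lat - 1)
    cols = np.clip(((latlon[idx, 1] + 180.0) // cell_deg).astype(int), 0, n_lon - 1)
    hist = np.zeros((n_lat, n_lon))
    np.add.at(hist, (rows, cols), 1.0)  # one vote per neighbor
    return hist / hist.sum()
```

The grid cells here are equal in degrees, not in area, so cells near the poles cover less ground. A more careful estimate would weight by area or cluster the neighbors' coordinates directly.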

Other relevant sites:

What Do the Sun and the Sky Tell Us About the Camera?

Currently, FNET collects data from approximately 80 FDRs located across the continent and around the world. Additional FDRs are constantly being installed so as to provide better observation of the power system.
